At A to Z Computers, we aim to offer top-notch tech solutions for your digital needs. Our coverage runs from theoretical computer science to complex algorithms and beyond [1]. Our team invests continuously in tech research and development.

Technology is changing fast, and staying ahead is key. We use the latest in computer science to drive innovation. Our experts are always exploring new ideas to shape the future of computing.

Looking into computer architecture is a big part of what we do. We study how computers work to make them better. This helps us improve performance and make technology easier to use.

ASCII is a foundation of computer communication. It assigns a numeric code to each character so that different devices interpret text the same way. A to Z Computers uses ASCII to make sure information flows smoothly across different devices.

Computer science goes beyond theory and communication between machines. It also includes game theory and algorithms for better decision-making, which help us solve complex problems in many areas, from economics to AI.

Computational biology is a growing field that combines computer science and biology. At A to Z Computers, we use tech to analyze biological data and improve healthcare. Our team is dedicated to making a difference in healthcare through technology.

Computer science also meets physics in exciting ways. We explore how physics can help improve computing. By mixing these two fields, we aim to make groundbreaking discoveries and open new doors in tech.

Memory is crucial for computers. At A to Z Computers, we follow memory technology closely, from RAM to newer memory solutions, so our clients stay current with the latest tech.

The CPU is the brain of a computer, handling tasks and instructions. At A to Z Computers, we focus on CPU design and on how improvements to it boost computing speed and efficiency.

Good input and output systems are key for computing. At A to Z Computers, we work on making these systems better, with the goal of technology that is easy and intuitive for everyone.

Software and firmware are essential for computers to work well. At A to Z Computers, we’re always improving these areas. We aim for better functionality, security, and innovation in our solutions.

At A to Z Computers, we believe there’s no limit to what technology can achieve. By pushing boundaries, we drive change and empower people and businesses. Join us as we explore the endless possibilities of technology.

Key Takeaways

- A to Z Computers offers comprehensive technology solutions across theoretical computer science, computational complexity, algorithms and data structures, parallel and distributed algorithms and architectures, and more [1].

- Our expertise spans computer architecture, ASCII communication, algorithmic game theory, computational biology, physics-computation intersection, computer memory, central processing units (CPUs), computer input and output, and software and firmware development.

- A to Z Computers is committed to pushing the boundaries of technology, harnessing the latest advancements, and delivering innovative solutions that drive success.

- We prioritize seamless communication with the help of ASCII encoding and facilitate strategic decision-making through algorithmic game theory.

- By integrating computational biology, physics, and computation, A to Z Computers advances healthcare and explores new frontiers in computing.

Exploring Theoretical Computer Science

Theoretical computer science covers many key concepts that are vital for modern computing. It looks at the math behind computing and sets the stage for many computer science areas. Key topics include computational complexity, algorithms, and data structures, which we’ll look at closely.

Computational complexity studies how much time and memory an algorithm needs, and whether a problem can be solved efficiently at all. It sorts problems into classes by how hard they are and what resources they require. For example, the P versus NP question asks whether every problem whose solution can be checked quickly can also be solved quickly.

Algorithms are step-by-step procedures for solving specific problems or tasks. They are the core of computer programs, and their design largely determines how well a problem is solved. By studying algorithms, scientists can find better ways to solve complex issues.

Data structures organize and store data in computers so that it can be handled and retrieved efficiently. Each data structure has its own strengths and weaknesses, so choosing the right one matters for algorithm performance.
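To make the link between algorithm design, data organization, and efficiency concrete, here is a minimal sketch comparing two ways of searching a sorted list. The function names and the comparison counter are illustrative assumptions, not something taken from the sources cited in this article.

```python
from typing import List, Tuple


def linear_search(items: List[int], target: int) -> Tuple[int, int]:
    """Return (index, comparisons); index is -1 if the target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


def binary_search(items: List[int], target: int) -> Tuple[int, int]:
    """Same contract as linear_search, but items must be sorted ascending."""
    comparisons = 0
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        comparisons += 1  # one probe of the list per loop iteration
        if items[mid] == target:
            return mid, comparisons
        if items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1, comparisons


data = list(range(1_000_000))  # already sorted
print(linear_search(data, 999_999))  # needs one comparison per element
print(binary_search(data, 999_999))  # needs about log2(n) probes
```

Linear search grows in proportion to the list size, while binary search grows with its logarithm. That gap is what complexity classes describe, and it only exists because the data is kept sorted.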

Two sources illustrate why theoretical computer science matters. The first [2] stresses that computability and complexity are properties of functions or infinite sequences, not of individual yes/no questions or numbers. It also notes that the Busy Beaver function is uncomputable, which shows the limits of computation.

The second [3] shows how the field is taught. TU Berlin’s Computer Science program includes at least four Theoretical Computer Science modules, each worth 6 ECTS, covering topics such as formal languages and logic.

The formal languages and automata module teaches how languages are structured and how abstract machines (automata) recognize them. It’s key for understanding how languages are treated in computer science.
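As a small illustration of a machine recognizing a language, the sketch below implements a deterministic finite automaton that accepts binary strings containing an even number of 1s. The language, state names, and function name are assumptions chosen for the example; they are not drawn from the TU Berlin module description.

```python
# Transition table of a two-state DFA over the alphabet {"0", "1"}.
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}


def accepts(word: str) -> bool:
    """Return True if word is over {0, 1} and has an even number of 1s."""
    state = "even"  # start state; also the only accepting state
    for symbol in word:
        if (state, symbol) not in TRANSITIONS:
            return False  # symbol outside the alphabet: reject
        state = TRANSITIONS[(state, symbol)]
    return state == "even"


for sample in ["", "1001", "1011", "10a"]:
    print(repr(sample), accepts(sample))
```

The automaton reads one symbol at a time and remembers only which state it is in, which is the defining constraint of finite automata.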

The logic module teaches formal logic and how to solve problems with it. It covers translating natural-language statements into formal structures and solving the resulting problems algorithmically. Logic is the basis of reasoning, helping computer scientists solve problems rigorously.
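The idea of translating statements into formal structures and then checking them mechanically can be sketched with a brute-force truth table. The helper names below are hypothetical, and the two argument forms are standard textbook examples rather than content from the cited module.

```python
from itertools import product
from typing import Callable


def implies(a: bool, b: bool) -> bool:
    """Material implication: 'if a then b'."""
    return (not a) or b


def is_tautology(formula: Callable[..., bool], num_vars: int) -> bool:
    """Check the formula under every assignment of truth values."""
    return all(
        formula(*values)
        for values in product([False, True], repeat=num_vars)
    )


# "If it rains, the ground is wet. It rains. Therefore the ground is wet."
def modus_ponens(p: bool, q: bool) -> bool:
    return implies(implies(p, q) and p, q)


# "If it rains, the ground is wet. The ground is wet. Therefore it rains."
def affirming_the_consequent(p: bool, q: bool) -> bool:
    return implies(implies(p, q) and q, p)


print(is_tautology(modus_ponens, 2))              # valid argument form
print(is_tautology(affirming_the_consequent, 2))  # invalid argument form
```

Checking all assignments takes 2^n steps for n variables, which is fine for small formulas and also hints at why satisfiability is a central problem in complexity theory.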

The computability and complexity module looks at what can and can’t be computed. It covers the halting problem and how to analyze the running time and memory use of algorithms. This helps scientists design better algorithms.

After core modules, students can pick a special module in theoretical computer science. This lets them focus on areas they’re interested in, deepening their knowledge in the field.

Studying theoretical computer science helps us understand the math that makes computing work. It lets us solve complex problems, design efficient algorithms, and improve data structures. The skills from this study are crucial for advancing computer science.

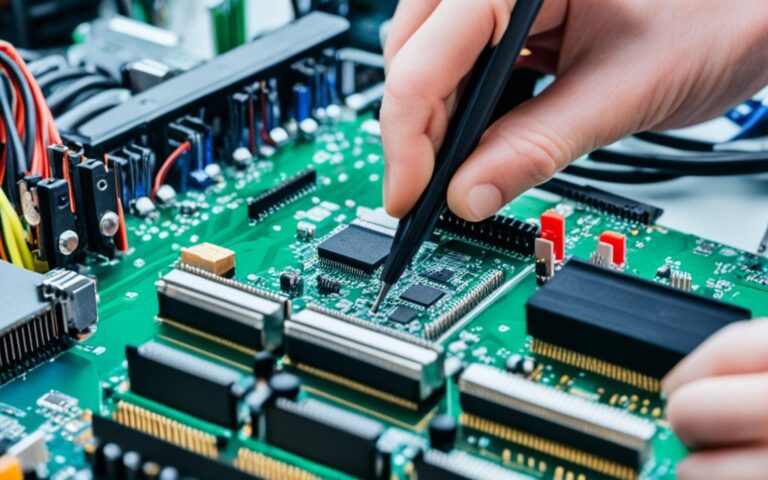

Understanding Computer Architecture

Computer architecture is the basic structure of computer systems. It covers how hardware parts work together and how software and hardware interact. It’s key for those in computer science, engineering, and hardware development. It shapes how a computer works and its performance.

At its heart, computer architecture has many parts that work together. The CPU is a key part, with registers, an arithmetic logic unit (ALU), and control circuits [4]. Registers hold data being processed, the ALU performs arithmetic and logic operations, and the control unit makes sure instructions are carried out correctly. The first commercial microprocessor, the Intel 4004, put all these parts on one chip [4].

There are different types of computer architecture, each with its own features. CISC (complex instruction set computer) processors typically have few registers but many instructions, so programs are shorter while individual instructions can take longer to execute [4]. RISC (reduced instruction set computer) processors have fewer, simpler instructions that execute quickly, so the same task needs more of them [4].

The memory system is crucial in computer architecture. It includes RAM for temporary data and ROM for permanent instructions [4]. Hard disk drives and solid-state drives provide long-term storage for large amounts of data [4].

Computer architecture has layers that work together. These include hardware, software, the operating system, and the user interface [5]. The hardware includes the CPU and peripherals. Software has system and application parts. The operating system manages the system and files, and the user interface helps users interact with the computer [5].

This layered structure allows for specialization and innovation in computer science. Each layer is important for smooth computer operation [5]. The hardware provides the base, the operating system manages operations, and the user interface makes things easy to use [5].

Knowing about computer architecture is crucial for computer users, from programmers to system admins. It helps understand how computers work and their parts. This knowledge lets professionals improve system performance and create better software.

The Importance of ASCII in Computer Communication

ASCII (American Standard Code for Information Interchange) is key in computer communication. It’s a basic character encoding standard [6]. First published in 1963 by the American Standards Association, the predecessor of the American National Standards Institute (ANSI) [6], ASCII uses 7-bit integers to represent text. This includes printable characters, control codes, and special symbols [7]. In 1969, RFC 20 proposed it as the format for network interchange [6].

ASCII’s compact 7-bit encoding made it well suited to storing and sending data [6]. Most computers used it, although some IBM mainframes used EBCDIC instead [6]. ASCII defines only 128 code points, of which 95 are printable characters [7]. Even so, it suited programming well: letters and digits occupy contiguous, ordered code ranges, which simplifies text handling [6].
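The 7-bit encoding described above can be inspected directly. This is a minimal sketch using only Python built-ins; the sample string and the `is_ascii` helper are illustrative assumptions.

```python
text = "A to Z"

# Each character maps to an integer code point below 128.
codes = [ord(character) for character in text]

# The same codes written as 7-bit binary strings.
bits = [format(code, "07b") for code in codes]

# Encoding to bytes stores one code per byte.
encoded = text.encode("ascii")


def is_ascii(candidate: str) -> bool:
    """Return True if every character fits in ASCII's 128 code points."""
    return all(ord(character) < 128 for character in candidate)


print(codes)
print(bits)
print(list(encoded))
print(ord("Z") - ord("A"))  # letters are contiguous, so this is 25
```

Because the letters are contiguous and ordered, tasks such as sorting text or converting case reduce to simple integer arithmetic on the codes.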

Extended ASCII, introduced in 1981, used 8 bits to offer 256 characters [8]. But it still didn’t cover all world languages [8]. Unicode came along as a solution [8]. Its first 128 code points match ASCII, and it supports far more characters and languages, with 149,186 characters as of version 15.0 [8].
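Unicode’s compatibility with ASCII is easy to check with UTF-8, the most widely used Unicode encoding. The sketch below assumes UTF-8 as the encoding (the sources above discuss Unicode in general), and the sample strings and helper name are hypothetical.

```python
def fits_in_ascii(text: str) -> bool:
    """Return True if the text can be encoded with plain ASCII."""
    try:
        text.encode("ascii")
    except UnicodeEncodeError:
        return False
    return True


plain = "CPU"
accented = "caf\u00e9"  # "café": the last letter is outside ASCII

# Pure-ASCII text produces identical bytes under ASCII and UTF-8.
print(plain.encode("ascii") == plain.encode("utf-8"))

# Non-ASCII characters need more than one byte in UTF-8.
print(len(accented), len(accented.encode("utf-8")))

print(fits_in_ascii(plain), fits_in_ascii(accented))
```

This byte-for-byte agreement on the first 128 code points is why files written decades ago in ASCII are still valid UTF-8 today.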

ASCII’s role in computer communication is hard to overstate. It set the standard for character encoding, making text and data exchange consistent [6]. Though Unicode has taken over on the internet, ASCII remains embedded throughout computing [8].

The Role of Algorithmic Game Theory in Computing

Algorithmic game theory is a blend of game theory and computer science. It looks at algorithms and how they solve strategic problems. This field is key in the computing world, helping with strategic decisions and outcomes.

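John Nash greatly influenced this field and shared the Nobel Prize in Economics in 1994. His work showed that every two-person game with a finite number of pure strategies has at least one equilibrium, provided players may randomize over their strategies [9]. This result led to more research and growth.

The Lemke-Howson algorithm is a major tool in this field. It finds a Nash equilibrium of a two-player game, that is, a pair of strategies from which neither player gains by deviating alone. However, it relies on pivoting steps like those of the Simplex Method and does not run in polynomial time in the worst case [9].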
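To show what an equilibrium check looks like in code, here is a minimal brute-force sketch that finds pure-strategy Nash equilibria of a two-player game. It is not the Lemke-Howson algorithm, which also handles mixed strategies; the function name and payoff matrices are illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def pure_nash_equilibria(
    row_payoffs: Matrix, col_payoffs: Matrix
) -> List[Tuple[int, int]]:
    """Return every (row, column) cell where neither player can gain
    by changing only their own choice."""
    n_rows, n_cols = len(row_payoffs), len(row_payoffs[0])
    equilibria = []
    for r in range(n_rows):
        for c in range(n_cols):
            row_is_best = all(
                row_payoffs[r][c] >= row_payoffs[alt][c]
                for alt in range(n_rows)
            )
            col_is_best = all(
                col_payoffs[r][c] >= col_payoffs[r][alt]
                for alt in range(n_cols)
            )
            if row_is_best and col_is_best:
                equilibria.append((r, c))
    return equilibria


# Prisoner's dilemma: index 0 = cooperate, 1 = defect.
# Payoffs are negative years in prison.
dilemma_row = [[-1, -3], [0, -2]]
dilemma_col = [[-1, 0], [-3, -2]]

# Matching pennies: a zero-sum game with no pure equilibrium.
pennies_row = [[1, -1], [-1, 1]]
pennies_col = [[-1, 1], [1, -1]]

print(pure_nash_equilibria(dilemma_row, dilemma_col))
print(pure_nash_equilibria(pennies_row, pennies_col))
```

The second game returns an empty list, which is why Nash’s theorem needs randomized (mixed) strategies and why more sophisticated algorithms are required in general.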

The rise of the internet has drawn growing interest to algorithmic game theory, bringing new challenges and opportunities for strategic thinking [9]. It is now a crucial area where computer science and economics meet, leading to new discoveries.

Finding Nash equilibria is hard, though. The existence proof relies on the Brouwer Fixed Point Theorem, which guarantees that an equilibrium exists but gives no efficient way to find one [9]. The problem is not known to be NP-complete. Instead, it is complete for the complexity class PPAD, and no efficient general algorithm is known [9]. This shows how important algorithmic game theory is for finding efficient solutions.

Dealing with these complex problems is a big challenge. PPAD takes its name from a parity argument on directed graphs, which shows that a solution must exist without indicating how to reach it quickly [9]. New methods and algorithms are needed for effective decision-making.

Algorithmic game theory helps us understand strategic situations by using algorithms. It helps players make smart choices by looking at different scenarios and what others might do next [10]. It balances computer science and game theory to solve complex problems efficiently [10].

In summary, algorithmic game theory changes how we make strategic decisions in computing. It uses advanced algorithms and models to analyze and improve strategic outcomes. This field is key for solving complex problems efficiently [9, 10].

| Key Contributions | Details |
|---|---|
| John Nash’s Nobel Prize | Awarded the Nobel Prize in Economics in 1994; proved the existence of Nash equilibria in two-person games with a finite number of pure strategies [9] |
| Internet’s Influence | The emergence of the internet has sparked increased interest and research in algorithmic game theory [9] |
| Lemke-Howson Algorithm | Uses pivoting steps like those of the Simplex Method to find Nash equilibria; does not run in polynomial time in the worst case [9] |
| Computational Complexity | Finding Nash equilibria is computationally hard, with difficulties tied to the Brouwer Fixed Point Theorem [9] |
| PPAD-Complete Problems | Finding a Nash equilibrium is complete for the class PPAD (not known to be NP-complete), highlighting its complexity [9] |
| Intersection of Disciplines | Algorithmic game theory bridges theoretical computer science and economics, leveraging insights from the global market and the internet [9] |
| Strategic Decision-Making | Algorithmic game theory allows parties to make rational decisions by evaluating possibilities and estimating competitors’ moves [10] |
| Efficient Problem Solving | Algorithmic game theory aims to find a balance between computer algorithms and game theory, enabling effective problem-solving [10] |

The Advancements in Computational Biology

Computational biology has changed how we study and understand biological systems. It combines biology and computer science. This field uses algorithms and computational methods to analyze data, model processes, and make predictions.

Molecular computation is a key part of this field. It uses molecular biology to create tiny computers. These computers can do complex tasks using molecules as their building blocks.

Algorithms are crucial in computational biology. They help researchers handle and analyze large biological datasets, such as DNA sequences and protein structures, and find patterns and insights in them.
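Two of the simplest sequence-analysis computations, GC content and k-mer counting, give a feel for how algorithms extract patterns from DNA data. This is a minimal sketch with hypothetical function names and a made-up sample sequence, not a method taken from the cited sources.

```python
from collections import Counter


def gc_content(sequence: str) -> float:
    """Fraction of bases that are G or C (0.0 for an empty sequence)."""
    sequence = sequence.upper()
    if not sequence:
        return 0.0
    return (sequence.count("G") + sequence.count("C")) / len(sequence)


def kmer_counts(sequence: str, k: int) -> Counter:
    """Count every overlapping substring of length k."""
    sequence = sequence.upper()
    return Counter(
        sequence[i:i + k] for i in range(len(sequence) - k + 1)
    )


sample = "ATGCGCGATATGC"  # invented sequence, for illustration only
print(round(gc_content(sample), 3))
print(kmer_counts(sample, 3).most_common(3))
```

Real pipelines apply the same ideas to sequences with millions or billions of bases, which is where efficient algorithms and data structures become essential.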

New discoveries in computational biology come from three main areas [11]. Electrophysiology helps us understand the electrical properties of cells. Artificial intelligence finds complex patterns in data, aiding health and drug research. Cerebral organoids mimic the human brain, helping researchers study brain diseases.

Computational biology integrates computational methods with biological studies [12]. Its growth was driven by the data explosion of the Human Genome Project. Now, it includes bioinformatics, mathematical modeling, and machine learning.

Bioinformatics is vital in analyzing genomes and predicting protein structures [12]. Computational tools and algorithms help researchers find insights in genomic data.

Mathematical modeling adds an abstract layer to computational biology [12]. It uses equations and computation to simulate biological processes. This helps researchers understand complex phenomena.
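As one example of simulating a biological process with an equation, the sketch below integrates the logistic growth model dN/dt = r·N·(1 − N/K) with the forward Euler method. The model choice, parameter values, and function name are assumptions made for illustration; the cited source does not prescribe a specific model.

```python
from typing import List


def simulate_logistic(
    initial: float, rate: float, capacity: float, dt: float, steps: int
) -> List[float]:
    """Forward-Euler integration of dN/dt = rate * N * (1 - N / capacity)."""
    population = initial
    trajectory = [population]
    for _ in range(steps):
        growth = rate * population * (1 - population / capacity)
        population += dt * growth
        trajectory.append(population)
    return trajectory


# Hypothetical parameters: 10 cells, growth rate 0.5 per hour,
# carrying capacity 1000 cells, 0.1-hour time step, 50 hours total.
trajectory = simulate_logistic(10.0, 0.5, 1000.0, 0.1, 500)
print(round(trajectory[100], 1))  # population after 10 hours
print(round(trajectory[-1], 1))   # approaches the carrying capacity
```

Growth is nearly exponential at first and then levels off near the carrying capacity. Forward Euler is the simplest integrator and needs a small time step; production models typically use more accurate solvers.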

Machine learning algorithms are key in computational biology [12]. They find patterns in data, aiding health and drug research.

Despite progress, computational biology faces challenges [12]. Handling different data types and improving analysis are tough tasks. Ensuring the ethical use of genomic data is also crucial.

Looking forward, computational biology is promising [12]. The use of artificial intelligence and deep learning will drive more progress. Personalized medicine could change healthcare by tailoring treatments to each patient’s genes.

The Intersection of Physics and Computation

The blend of physics and computation is fascinating. It looks at how physical systems and computation work together, especially in quantum computing. Quantum computing uses quantum mechanics to tackle certain tasks that are impractical for classical computers.

Quantum computing is based on quantum mechanics, the branch of physics that describes matter at the smallest scales. Information is carried by quantum bits, or qubits, which can exist in a superposition of 0 and 1. Quantum algorithms exploit superposition and interference to solve certain problems much faster than known classical methods [13].

Entanglement is a key idea in quantum computing. Entangled qubits share a joint state, so measurements on them are correlated even when the qubits are far apart. This is important for quantum algorithms and secure communication [13].

Quantum gates are crucial too. They transform the states of qubits, and sequences of gates form the circuits that implement quantum algorithms [13].
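Superposition, gates, and entanglement can be illustrated with a tiny state-vector simulation on an ordinary computer. The sketch below tracks the four amplitudes of a two-qubit system and applies a Hadamard gate followed by a CNOT gate to produce an entangled Bell state. The function names are hypothetical, and real amplitudes suffice for this particular circuit (general quantum states use complex numbers).

```python
import math
from typing import List

# Amplitudes for the basis states |00>, |01>, |10>, |11>.
State = List[float]


def hadamard_on_first(state: State) -> State:
    """Apply a Hadamard gate to the first qubit."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state: State) -> State:
    """Flip the second qubit whenever the first qubit is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state: State) -> List[float]:
    """Measurement probability of each basis state."""
    return [amplitude * amplitude for amplitude in state]


start = [1.0, 0.0, 0.0, 0.0]             # both qubits in state 0
superposed = hadamard_on_first(start)     # first qubit in superposition
bell = cnot_first_controls_second(superposed)

print([round(p, 3) for p in probabilities(bell)])
```

In the final state, only the outcomes 00 and 11 have non-zero probability, so measuring one qubit fixes the result for the other. Simulating n qubits this way needs 2^n amplitudes, which is one reason quantum hardware is of interest.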

Important quantum algorithms include Shor’s algorithm for factoring integers and Grover’s algorithm for searching unstructured data [13].

Quantum physics underpins the hardware used to build quantum computers, such as superconducting qubits and trapped ions. These devices must be fabricated very precisely, and superconducting qubits in particular must be kept extremely cold to work properly [13].

Quantum computing has many uses in physics. It can change how we simulate quantum systems, helping with materials and chemistry research. It also helps in quantum field theory, cosmology, and other physics areas [13].

By using quantum computing, physicists can make better predictions, control quantum states, and solve hard physics problems. Quantum algorithms help us explore and understand the universe better [13].

In summary, combining physics and computation, especially with quantum computing, brings new ways to understand the world and increase what we can do with computers.

The Evolution of Computer Memory

Computer memory has changed a lot over the years, from punch-card systems to today’s advanced technologies. Understanding how memory has developed helps us see how much computing has advanced. Let’s look at the exciting journey of computer memory and its importance in technology.

The Early Innovations

The story of computer memory starts with pioneers like Herman Hollerith, Vannevar Bush, and Alan Turing. In 1890, Hollerith’s punch-card system changed data processing, saving the U.S. government time and money on the census, and the company he founded later became part of IBM [14]. Bush’s Differential Analyzer, built in 1931, was a big step in computer design [14]. Turing’s 1936 work on a universal machine influenced modern computer design, laying the groundwork for memory technology [14].

The Emergence of Digital Memory

As computers got better, the need for good memory storage grew. Hewlett-Packard was founded in 1939, and in 1941 Konrad Zuse completed the Z3, widely regarded as the first working programmable digital computer. The Z3 was destroyed in World War II, but Zuse went on to build the Z4, which in 1950 became the first commercial digital computer [14]. The Atanasoff-Berry Computer (ABC), designed in 1941, was the first digital computer in the U.S. able to store information in its main memory [14].

The Rise of Electronic Memory

In the mid-20th century, electronic memory changed computers. ENIAC, the first general-purpose electronic digital computer, was built in 1945 [14]. In 1949, Cambridge’s EDSAC became the first practical stored-program computer, and in the same year Australia’s first digital computer, CSIRAC, ran its first program [14].

The Birth of Integrated Circuits

The late 1950s and early 1960s saw a big change with integrated circuits. Jack Kilby demonstrated the first integrated circuit in 1958, and Robert Noyce independently developed his own version shortly afterward, making memory smaller and more powerful [14]. Further innovations followed, such as Ethernet, developed by Robert Metcalfe in 1973 [14].

The Era of High-Capacity Storage

As data storage needs grew, so did the solutions. The first gigabyte-capacity hard disk drive came out in 1980, a big step in storage [15]. The first laserdisc system in 1978, along with magnetic tape and compact cassette storage, also made storing large amounts of data easier [15].

The Future of Computer Memory

Computer memory is still evolving, with new advancements in primary and secondary storage. From punch cards to today’s high-capacity devices, memory technology has greatly improved computing speed and efficiency [14, 15]. It’s exciting to think about what the future holds for computer memory and its impact on computing.

| Year | Memory Milestone | Reference |
|---|---|---|
| 1890 | Herman Hollerith’s punch-card system | 14 |
| 1931 | Vannevar Bush’s Differential Analyzer | 14 |
| 1936 | Alan Turing’s principle of the Turing machine | 14 |
| 1939 | HP founded in Palo Alto, California | 14 |
| 1941 | Konrad Zuse completes the Z3 machine | 14 |
| 1941 | Atanasoff-Berry Computer (ABC) designed | 14 |
| 1945 | Electronic Numerical Integrator and Computer (ENIAC) built | 14 |
| 1949 | First practical stored-program computer (EDSAC) | 14 |
| 1958 | Integrated circuit unveiled | 14 |
| 1973 | Ethernet developed by Robert Metcalfe | 14 |
| 1980 | Introduction of the first gigabyte hard disk drive | 15 |

The Function of Central Processing Units (CPUs)

The CPU is the heart of a computer, running programs and performing calculations. It has key parts like registers, an ALU, and a control unit.

Registers are small, very fast storage areas inside the CPU. They keep the data and instructions currently in use close at hand, which makes the computer work faster.

The arithmetic logic unit (ALU) performs arithmetic and logical operations such as adding and comparing. Its results drive both numeric computation and the comparisons behind branching decisions.

The control unit manages the CPU’s work. It fetches and decodes instructions and makes sure data moves correctly between parts, so that the ALU, registers, and other components work together smoothly.
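How registers, the ALU, and the control unit cooperate can be sketched with a toy fetch-decode-execute loop. The instruction set below (LOAD, ADD, SUB, JNZ, HALT) and the four register names are invented for this example and do not correspond to any real processor.

```python
from typing import Dict, List, Tuple

Instruction = Tuple  # e.g. ("LOAD", "A", 4) or ("ADD", "A", "B")


def run(program: List[Instruction]) -> Dict[str, int]:
    """Execute a toy program and return the final register contents."""
    registers = {"A": 0, "B": 0, "C": 0, "D": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        opcode, *operands = program[pc]  # control unit: fetch and decode
        pc += 1
        if opcode == "LOAD":             # put a constant into a register
            registers[operands[0]] = operands[1]
        elif opcode == "ADD":            # ALU: arithmetic
            registers[operands[0]] += registers[operands[1]]
        elif opcode == "SUB":
            registers[operands[0]] -= registers[operands[1]]
        elif opcode == "JNZ":            # ALU comparison drives a branch
            if registers[operands[0]] != 0:
                pc = operands[1]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return registers


# Multiply 4 by 3 using repeated addition.
multiply = [
    ("LOAD", "A", 0),   # 0: accumulator
    ("LOAD", "B", 4),   # 1: value to add
    ("LOAD", "C", 3),   # 2: loop counter
    ("LOAD", "D", 1),   # 3: constant one
    ("ADD", "A", "B"),  # 4: A = A + B
    ("SUB", "C", "D"),  # 5: C = C - 1
    ("JNZ", "C", 4),    # 6: repeat while C is not zero
    ("HALT",),          # 7
]
print(run(multiply))
```

Real CPUs do the same three steps in hardware, billions of times per second, with instructions encoded as binary numbers instead of tuples.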

Now, CPUs often have many cores to boost speed and tackle more tasks at once. Mainstream processors commonly have anywhere from two to 12 cores [16], and the higher counts suit heavy multitasking and compute-intensive work.

Multi-core CPUs have changed computing for the better. They split tasks across cores for faster, more efficient work, which can mean better performance and lower energy use for the same workload.

Multi-threading also boosts performance, including in virtual machines [16]. It breaks tasks into smaller threads, which lets a CPU work on several instruction streams at once and speeds up complex work.
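The pattern of splitting a task into pieces and handing them to several workers can be sketched with Python’s standard `concurrent.futures` module. The helper names and the sum-of-squares workload are illustrative assumptions. Note that in standard CPython, threads share one interpreter lock, so CPU-bound work like this gains little from threads; swapping in `ProcessPoolExecutor` is the usual way to use separate cores.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def chunked(values: List[int], n_chunks: int) -> List[List[int]]:
    """Split values into at most n_chunks contiguous pieces."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk: List[int]) -> int:
    """The unit of work handed to each worker."""
    return sum(value * value for value in chunk)


def parallel_sum_of_squares(values: List[int], workers: int = 4) -> int:
    """Dispatch one chunk per worker and combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum_of_squares, chunked(values, workers))
        return sum(partial_sums)


numbers = list(range(1000))
print(parallel_sum_of_squares(numbers))
print(chunked([1, 2, 3, 4, 5], 2))
```

The split, compute, and combine structure stays the same whichever executor is used; only the kind of worker changes.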

The Role of Computer Input and Output

Input and output units let users communicate with the computer and let it work with other devices [17].

The input unit takes data from outside and sends it to the CPU for processing [18]. It uses peripherals like keyboards, mice, and touchscreens, which turn user actions into signals the computer can process.

Keyboards are among the most common input devices. They come in many layouts, such as 84-key and 101-key models, to suit different needs [19].

Pointing devices like mice move the cursor on the screen, letting users interact with apps and games [19]. The first mouse was developed in the early 1960s, and today’s optical mice are far more advanced.

Other input devices include web cameras, scanners, and optical or magnetic readers for tasks like capturing images and reading data [19].

The output unit presents the computer’s results, like text or images, to users [19]. Devices like monitors and speakers handle this.

Monitors show us what’s happening on the computer, from text to video [17]. They’re vital for work and for entertainment.

Printers turn digital information into physical copies, like documents or photos [19]. They’re split into impact and non-impact types, with laser and inkjet printers being common non-impact examples.

Audio output is also key, giving us sound through speakers or headphones [19]. This lets us enjoy music, watch videos, or talk to others.

Projectors display large images for presentations or movies [17]. They’re great for sharing ideas or enjoying shows at home.

| Input Devices | Usage |
|---|---|
| Keyboard | Frequently used input device; various layouts available |
| Mouse | Precise cursor control and navigation |
| Web Cameras | Image and video capture |
| Scanners | Document and image scanning |
| Optical Mark Readers (OMR) | Recognition of marked data on documents |
| Optical Character Readers (OCR) | Text extraction from printed or handwritten documents |
| Magnetic Ink Character Recognition (MICR) readers | Reading characters printed in magnetic ink, for example on cheques |

| Output Devices | Usage |
|---|---|
| Monitor | Visual feedback and display |
| Printer | Transforming digital information into physical copies |
| Speaker | Audio output for various applications |
| Projector | Projection of visuals on large screens |

Input and output units work together to make computers useful for us. They let us use our computers fully, making our digital lives better.

The Significance of Computer Software and Firmware

Understanding the roles of software and firmware is key to how computers work. Software lets users do many tasks. Firmware connects the hardware and software, making everything work smoothly.

Software is made up of programs and data that tell a computer what to do. It includes system software, application software, and programming languages. This software is crucial for users to interact with computers and get things done.

System software includes operating systems like Windows and Linux. These systems manage the computer’s memory and other resources [20]. Windows is the most common operating system on desktop and laptop computers [20].

Firmware is software stored in a device’s non-volatile memory that starts the system when it turns on [21]. It’s installed on the hardware when the device is made and controls how the device works [21]. Devices like smartphones and IoT gadgets need firmware to work properly [21].

Firmware updates are important for keeping devices running well. They fix bugs, improve security, add new features, and extend media support [21]. How often devices get updates depends on how they’re used and what they do [21]. Now, updates can be delivered over the air, making them easier and automatic [21].
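Before a device applies an update, it typically needs to decide whether the offered firmware is newer and whether the downloaded image arrived intact. The sketch below shows one plausible way to make that decision; the dotted version format, the function names, and the use of a SHA-256 digest are all assumptions for illustration, not a description of any vendor’s update process.

```python
import hashlib
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '1.10.0' into (1, 10, 0)."""
    return tuple(int(part) for part in text.split("."))


def should_install(
    current: str, offered: str, image: bytes, expected_sha256: str
) -> bool:
    """Install only if the offer is newer and the image is undamaged."""
    is_newer = parse_version(offered) > parse_version(current)
    is_intact = hashlib.sha256(image).hexdigest() == expected_sha256
    return is_newer and is_intact


# Stand-in for a downloaded firmware image (hypothetical content).
demo_image = b"demo firmware image"
demo_digest = hashlib.sha256(demo_image).hexdigest()

print(should_install("1.2.9", "1.10.0", demo_image, demo_digest))
print(should_install("1.10.0", "1.2.9", demo_image, demo_digest))
print(should_install("1.2.9", "1.10.0", demo_image + b"!", demo_digest))
```

Comparing versions as tuples of integers avoids the trap of string comparison, where "1.10.0" would sort before "1.2.9". Real update systems usually also verify a cryptographic signature, which this sketch leaves out.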

| Type of Firmware | Features and Integration Levels |
|---|---|
| Low-level firmware | Provides basic machine instructions for hardware functionality |
| High-level firmware | Enables advanced features and functionality |
| Subsystem firmware | Integrates with specific hardware subsystems for specialized tasks |

Firmware is in many technologies, from computers to cars and home appliances [21]. It gives hardware the instructions it needs to work properly, so that applications run smoothly [21]. Firmware stored in flash memory is easier to update than other types [21].

Software and firmware are both vital for modern computers to work well. They help everything talk to each other and make using computers better.

Conclusion

A to Z Computers covers a wide range of fields and tech advances that shape our world. From the ancient mechanical Antikythera mechanism [22] to the Z1 computer by Konrad Zuse [23] and Tim Berners-Lee’s World Wide Web [23], the evolution of computers is remarkable.

The decades have brought the shift from vacuum tubes to transistors and the rise of personal computers and smartphones. These changes made computers more reliable and accessible to everyone. Now, devices like tablets, laptops, and smartphones change how we use technology [23].

Today, the focus is on artificial intelligence and quantum computing [23]. As computers get more powerful, we’ll see more progress in fields like biology, gaming, and research [22, 24]. From the ancient Antikythera mechanism to today’s supercomputers, the journey points to a future full of excitement and change. Let A to Z Computers inspire you to explore new possibilities.

FAQ

What is A to Z Computers?

A to Z Computers covers many areas like theoretical computer science and computer architecture. It also includes computational biology and more. It looks at the theory, practical use, and new developments in technology.

What areas does theoretical computer science cover?

Theoretical computer science looks at the basics of computation. It includes topics like computational complexity, algorithms, and data structures. It’s all about the math behind how computers work.

What does computer architecture refer to?

Computer architecture is about the design of a computer system. It shows how all the parts work together. It’s what makes a computer process data and do what it’s supposed to do.

What is ASCII?

ASCII stands for American Standard Code for Information Interchange. It’s a character encoding that computers and devices use to represent and exchange text. ASCII uses 7-bit numbers to represent letters, digits, and symbols.

What is algorithmic game theory?

Algorithmic game theory mixes game theory and computer science. It looks at how algorithms help solve problems in games and strategic situations. It’s about making smart choices in games and real life.

What is computational biology?

Computational biology uses computers to study and understand life sciences. It includes things like molecular computation and bioinformatics. It helps scientists analyze data and make predictions.

What does the intersection of physics and computation explore?

This area looks at how physics and computers work together. For example, quantum computing uses quantum mechanics for complex tasks. For certain problems, it can be much faster than regular computers.

What role does computer memory play in computer architecture?

Memory is key in computer architecture. It has two types: primary and secondary storage. Primary storage, like RAM, holds data while it’s being used. Secondary storage, like hard drives, keeps data long-term.

What is the function of the central processing unit (CPU)?

The CPU is the brain of a computer. It runs programs and has parts like registers and an arithmetic logic unit. The control unit manages data and instructions.

What do the input and output units of a computer system do?

These units let users talk to the computer. The input unit takes in data from things like keyboards. The output unit shows results, like on screens or printers.

What roles do software and firmware play?

Software is the set of programs that tell the computer what to do, while firmware is software stored in non-volatile memory on the hardware itself. Firmware helps start the computer when it turns on.

Source Links

1. https://tek.com/en/documents/primer/oscilloscope-basics – Oscilloscope Basics

2. https://scottaaronson.blog/?p=8106 – The Zombie Misconception of Theoretical Computer Science

3. https://www.tu.berlin/en/eecs/academics-teaching/study-offer/masters-programs/what-is-theoretical-computer-science – What is Theoretical Computer Science?

4. https://www.spiceworks.com/tech/tech-general/articles/what-is-computer-architecture/ – Computer Architecture: Components, Types and Examples – Spiceworks

5. https://techfocuspro.com/what-are-the-four-main-layers-of-computer-architecture/ – What are the Four Main Layers of Computer Architecture? – Tech Focus Pro

6. https://www.techtarget.com/whatis/definition/ASCII-American-Standard-Code-for-Information-Interchange – What is ASCII (American Standard Code for Information Interchange)?

7. https://en.wikipedia.org/wiki/ASCII – ASCII

8. https://www.investopedia.com/terms/a/american-code-for-information-interchange.asp – American Code For Information Interchange (ASCII) Overview

9. https://www2.math.upenn.edu/~ryrogers/AGT.pdf – AGT

10. https://www.upgrad.com/blog/what-is-the-algorithmic-game-theory/ – What Is the Algorithmic Game Theory? Explained With Examples | upGrad blog

11. https://www.polytechnique-insights.com/en/columns/science/biocomputing-the-promise-of-biological-computingbrains/ – Biocomputing: the promise of biological computing – Polytechnique Insights

12. https://www.omicsonline.org/open-access-pdfs/advancements-in-computational-biology-unraveling-the-mysteries-of-life.pdf – Advancements in Computational Biology: Unraveling the Mysteries of Life

13. https://medium.com/@diya.disha.hiremath/bridging-the-gap-between-physics-and-computer-science-34527bd6a863 – Bridging the Gap Between Physics and Computer Science

14. https://www.livescience.com/20718-computer-history.html – History of computers: A brief timeline

15. https://www.pingdom.com/blog/the-history-of-computer-data-storage-in-pictures/ – The History of Computer Data Storage, in Pictures – Pingdom

16. https://www.techtarget.com/whatis/definition/processor – What is processor (CPU)? A definition from WhatIs.com

17. https://www.hp.com/th-en/shop/tech-takes/post/input-output-devices – Input and Output Devices Found in Computers

18. https://builtin.com/hardware/i-o-input-output – What is I/O (Input/Output) in Computing? | Built In

19. https://www.geeksforgeeks.org/input-and-output-devices/ – Input and Output Devices – GeeksforGeeks

20. https://www.simplilearn.com/tutorials/programming-tutorial/what-is-system-software – What is System Software?

21. https://www.techtarget.com/whatis/definition/firmware – What is Firmware? Definition, Types and Examples

22. https://www.bricsys.com/blog/who-invented-computers – Who invented computers? | Bricsys Blog

23. https://www.codingal.com/coding-for-kids/blog/evolution-of-computers/ – Evolution of Computers

24. http://clubztutoring.com/ed-resources/math/computer-definitions-examples-6-7-6/ – Computer: Definitions and Examples – Club Z! Tutoring